The management team at a company with a large on-premises OpenStack environment wants to move non-production workloads to AWS. An AWS Direct Connect connection has been provisioned and configured to connect the environments. Due to contractual obligations, the production workloads must remain on-premises, and will be moved to AWS after the next contract negotiation. The company follows Center for Internet Security (CIS) standards for hardening images; this configuration was developed using the company's configuration management system.

Which solution will automatically create an identical image in the AWS environment without significant overhead?

Correct Answer:

D

https://www.brad-x.com/2015/10/01/importing-an-openstack-vm-into-amazon-ec2/

https://aws.amazon.com/ec2/vm-import/

A DevOps Engineer must automate a weekly process of identifying unnecessary permissions on a per-user basis, across all users in an AWS account. This process should evaluate the permissions currently granted to each user by examining the user's attached IAM access policies compared to the permissions the user has actually used in the past 90 days. Any differences in the comparison would indicate that the user has more permissions than are required. A report of the deltas should be sent to the Information Security team for further review and IAM user access policy revisions, as required.

Which solution is fully automated and will produce the MOST detailed deltas report?

Correct Answer:

C
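The comparison described in the question (permissions granted versus permissions used in the past 90 days) can be sketched as a set difference per user. This is only an illustration, not the text of the correct answer: the function names `unused_services` and `build_report`, the input shape, and the service-level granularity are all assumptions. In a real account the inputs might come from IAM attached policies and IAM service last accessed data, which the sketch does not fetch.

```python
from datetime import datetime, timedelta, timezone


def unused_services(granted, last_used, now, window_days=90):
    """Return granted service namespaces with no recorded use inside the window.

    granted   -- set of service namespaces the user's policies allow (assumed input)
    last_used -- dict mapping service namespace to last-use datetime (assumed input)
    """
    cutoff = now - timedelta(days=window_days)
    return sorted(
        svc for svc in granted
        if last_used.get(svc) is None or last_used[svc] < cutoff
    )


def build_report(users, now):
    """Map each user name to its delta; users with no delta are omitted."""
    report = {}
    for name, data in users.items():
        delta = unused_services(data["granted"], data["last_used"], now)
        if delta:
            report[name] = delta
    return report


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    sample = {
        "alice": {
            "granted": {"s3", "ec2"},
            "last_used": {"s3": now - timedelta(days=5)},
        }
    }
    print(build_report(sample, now))
```

A weekly schedule and delivery of the report to the Information Security team are outside this sketch.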

An ecommerce company is running an application on AWS. The company wants to create a standby disaster recovery solution in an additional Region that keeps the current application code. The application runs on Amazon EC2 instances behind an Application Load Balancer (ALB). The instances run in an EC2 Auto Scaling group across multiple Availability Zones. The database layer is hosted on an Amazon RDS MySQL Multi-AZ DB instance. Amazon Route 53 DNS records point to the ALB.

Which combination of actions will meet these requirements with the LOWEST cost? (Select THREE.)

Correct Answer:

AEF

A company is using an AWS CodeBuild project to build and package an application. The packages are copied to a shared Amazon S3 bucket before being deployed across multiple AWS accounts.

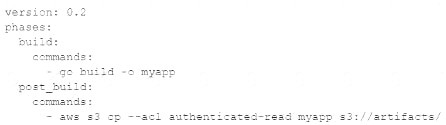

The buildspec.yml file contains the following:

The DevOps Engineer has noticed that anybody with an AWS account is able to download the artifacts. What steps should the DevOps Engineer take to stop this?

Correct Answer:

D

A company has deployed several applications globally. Recently, Security Auditors found that a few Amazon EC2 instances were launched without Amazon EBS disk encryption. The Auditors have requested a report detailing all EBS volumes that were not encrypted in multiple AWS accounts and Regions. They also want to be notified whenever this occurs in the future.

How can this be automated with the LEAST amount of operational overhead?

Correct Answer:

C

https://aws.amazon.com/blogs/aws/aws-config-update-aggregate-compliance-data-across-accounts-regions/

https://docs.aws.amazon.com/config/latest/developerguide/aws-config-managed-rules-cloudformation-templates
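To make the requested report concrete, the sketch below shows one possible shape for it: a flat list of (account, Region, volume ID) rows for every unencrypted volume. It is not the low-overhead solution the question asks for and does not call any AWS API. The function name `unencrypted_volumes` and the input mapping are hypothetical; the `VolumeId` and `Encrypted` keys are assumed to match the volume records returned by the EC2 API.

```python
def unencrypted_volumes(volumes_by_location):
    """Flatten per-account, per-Region volume records into report rows.

    volumes_by_location -- dict keyed by (account_id, region) whose values are
    lists of volume records with "VolumeId" and "Encrypted" keys (assumed shape).
    """
    rows = []
    for (account, region), volumes in sorted(volumes_by_location.items()):
        for vol in volumes:
            # Treat a missing "Encrypted" flag as unencrypted so it gets reviewed.
            if not vol.get("Encrypted", False):
                rows.append((account, region, vol["VolumeId"]))
    return rows


if __name__ == "__main__":
    sample = {
        ("111111111111", "us-east-1"): [
            {"VolumeId": "vol-aaa", "Encrypted": True},
            {"VolumeId": "vol-bbb", "Encrypted": False},
        ],
    }
    for row in unencrypted_volumes(sample):
        print(row)
```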